Switching from precise HLR to discrete HLR

Motivation

We observed in the previous series that HLR is quite a heavy thing, especially the "precise" one. To test things out, we invented a "boxy HLR view," which can right away show us the HLR results without waiting for them to appear in a technical drawing. In what follows, we will use the following terms:

- HLR for the precise algorithm, or "hidden line removal" in its common sense (this should be clear from the context).

- DHLR for the discrete algorithm.

What's written below is relevant for OpenCascade ver.7.6. This version of the kernel is probably not the latest one, but, believe me, nothing has changed in the HLR logic to seek out any gems in the master branch. This algorithm has been developed over decades, slowly but surely, so slowly that a couple of minor releases is nothing for this kind of tool.

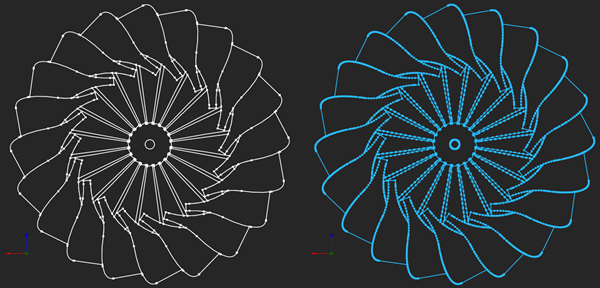

Originally, the HLR algorithm performs exact projection with the goal of creating analytical representations of curves for projected edges. Discrete variation (DHLR) begins with a faceted representation of geometry; hence, its accuracy is dependent on mesh precision. The visual flaws of DHLR become clear if we keep vertices displayed in the projection result:

|

Precise HLR (on the left) vs discrete HLR (on the right). All vertices are displayed, so you can see that DHLR effectively returns polylines. |

Precise HLR generates visually appealing, clean projections that are ready for placement into technical drawings. Possible flaws are never severe, and the only issue with this algorithm is its poor performance. DHLR was developed as a faster alternative to the exact algorithm; however, the projected images it produces are notably less healthy. Let's look at some numbers to ground the subsequent conversation about performance.

To compare the performance of HLR with DHLR, I used a number of CAD files containing milled, turned, and sheet metal parts of moderate complexity. The axonometric projection view was chosen for each part, but it should be noted that the HLR performance is highly dependent on the projection direction. The performance tests were done in the following computing environment:

CPU: Intel(R) Core(TM) i7-10870H CPU @ 2.20GHz RAM: 32GiB Machine Type: Notebook OS: Windows 10, x64.

For punctual tests on Linux, the following computing environment has been used:

CPU: Intel(R) Core(TM) i9-12900HX RAM: 32GiB Machine Type: Notebook OS: Ubuntu 22.04.3 LTS

In the following plots, the vertical axis is the elapsed time (in seconds), and the horizontal axis denotes the sequential number of a test. The test cases have been chosen quite randomly, but most of them are typical machining parts with somewhat canonical geometries. No tricky shapes have been used, no turbine blades, no molded pieces, etc. We took only the pieces that had been milled, turned, laser-cut, or bent with conventional machines.

SET 1: HLR vs DHLR on CAD files representing tubes and profiles (click to enlarge). |

The illustration above shows comparable performance of the exact and discrete algorithms on a collection of profile-like geometries (steel tubes, aluminum profiles). The graph has unpleasant spikes, indicating the tool's increasing appetite in some cases. Those spikes look hypothetically dangerous, and, as the following tests would reveal, they mostly correspond to situations when the algorithm's performance stops being acceptable. Therefore, we intend to set an execution time limit for both HLR and DHLR so that the algorithms do not run indefinitely. In the image below, this time limit is represented by a dashed horizontal line and set at 0.5 seconds.

SET 2: HLR vs DHLR on CAD files representing sheet metal parts (click to enlarge). |

Already here, for a number of bent and rolled parts, we can see how badly the precise HLR may behave, although the DHLR is not affected as much. Still, the detected spikes overlap, i.e., both algorithms have difficulties with the same cases.

SET 3: HLR vs DHLR on CAD files representing lathed parts with optional milling features (click to enlarge). |

SET 4: HLR vs DHLR on CAD files representing milled parts (click to enlarge). |

You may also want to check out the global view on the HLR vs DHLR comparison on more than 3400 solids.

Timeout or a sad story about abandoned threads

OpenCascade algorithms do not include a timeout mechanism, and that's completely fine. You would not expect to find logic like this in a geometric kernel, as it's a little bit out of its scope. To implement such logic, the following ideas can be used:

- Let's give HLR and DHLR their separate CPU threads. The main thread can then block while waiting for the issued thread for a prescribed number of milliseconds.

- Since it's not easy to terminate a constructed thread, let's just abandon it if the timeout is exceeded. The thread might finish up some time in the future, but we won't care.

Abandoned threads are like prodigal sons of your main process. They left, but at some point in the future, they may come back into their worker functions and mess everything up. Therefore, it is generally a good idea to keep track of abandoned threads and not let them update the shared data. The list of abandoned thread IDs can be stored in a concurrent set which allows for being accessed and edited in parallel mode.

|

To create a thread, the following standard OS functions are normally used:

- CreateThread() on Windows.

- pthread_create() on Linux.

It takes nothing to run a thread, especially if you aren't going to design something like a thread pool or a "task stealing" mechanism. Back in the day, I would have preferred to use TBB or perhaps Qt threads for that type of work, but more dependency on third-party libraries does not make me happy anymore. Anyway, the problematic thing isn't a thread. It's timeout.

Technically, depending on the operating system, the following functions are of our interest:

- WaitForSingleObject() on Windows. This function perfectly allows passing a timeout value as its second argument, and it works just fine. According to MSDN, this function "waits until the specified object is in the signaled state or the timeout interval elapses." The "signaled" state of a thread (which is yet another OS object identified by a HANDLE) means that the worker thread has finished its execution and returned successfully.

- pthread_timedjoin_np() on Linux. This function is less handy because it does not take a millisecond timeout and instead expects you to calculate the timestamp of when a thread can no longer be joined.

You may want to read more about the comparison between Windows and Linux threads in the paper by Eduard Trunov. It's a well-written introduction to the subject; however, it lacks information on how to properly compute timeouts on Linux. And that's probably something you wouldn't want to figure out on your own. If so, continue reading.

OpenCascade comes up with a wrapper abstraction over a thread object, which is the OSD_Thread class. The only purpose of this shallow wrapper is to unify the interfaces to Windows and posix threads, making them easy to use in cross-platform apps. However, the implementation of a timeout for posix threads is sloppy. Here is the thing. In the timespec structure, we must increment the current time by seconds and nanoseconds. If nanoseconds are added without taking special care to prevent overflow into seconds, timeout logic won't function properly (at least on my modern Linux laptop). Our custom timespec_add_ns() function would accurately increment the timing:

/*

* The following code has been taken from https://gist.github.com/BinaryPrison/1112092

* for "fast add nanoseconds to timespec structure."

*/

static inline uint32_t __iter_div_u64_rem(uint64_t dividend, uint32_t divisor, uint64_t *remainder)

{

uint32_t ret = 0;

while (dividend >= divisor) {

/* The following asm() prevents the compiler from

optimising this loop into a modulo operation. */

asm("" : "+rm"(dividend));

dividend -= divisor;

ret++;

}

*remainder = dividend;

return ret;

}

#define NSEC_PER_SEC 1000000000L

static inline void timespec_add_ns(struct timespec *a, uint64_t ns)

{

a->tv_sec += __iter_div_u64_rem(a->tv_nsec + ns, NSEC_PER_SEC, &ns);

a->tv_nsec = ns;

}

The reworked version of OSD_Thread accommodating the patch above has been published as our asiAlgo_Thread class, so you may want to check it out.

Discussion and criticism

The following graph represents the HLR performance over sheet metal cases with an enforced timeout of 0.5 seconds. If the projection algorithm exceeds the limit, it gets abandoned and its results are ignored.

Even more representative results are obtained for the dataset with initially larger spikes. The exact HLR has had an appetite to consume more than 60 seconds of computation, but now it's limited in time, and the worst case is around 0.5–0.6 seconds, which is pretty much our timeout. But there is no magic here. We did not optimize the algorithm; we did not make HLR better; rather, we abandoned its results! The absolute worst-case scenario is that both HLR and DHLR exceed the time limits, in which event no results can be returned. These are rare situations, though (such cases would require a timeout increase).

SET 3: HLR vs DHLR on CAD files representing lathed parts with optional milling features and a timeout of 0.5 sec (click to enlarge). |

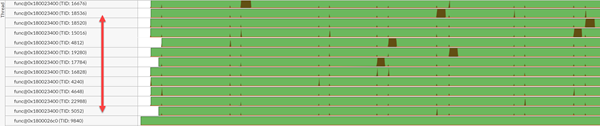

Abandoned threads, although functioning, do not appear to be the best architectural solution ever. It could be better to use the "task stealing" design pattern for parallel computations. In such a paradigm, you have a pool of once-allocated threads (as objects in the operating system), where each thread takes a new chunk of data once the last portion of the job is done. Such an approach would not result in a vast number of OS threads allocated here and there, and you would achieve a more balanced computation regime than the one shown below:

|

Numbers and numbers of worker threads created during the application uptime. |

Another issue with HLR is the fact that it sometimes crashes your application. On Windows, you can effectively surround every HLR and DHLR invocation with try-catch, but this will not save you on Linux. The issue is that SIGSEV segmentation fault signals (sometimes triggered by HLR/DHLR) are not adequately caught, even with all of these OSD::SetSignal() and mysterious OCC_CATCH_SIGNALS instructions. The only approach I can think of here is to effectively patch the corresponding code in OpenCascade, which is not easy to do. Partly due to the objective algorithm's complexity, but also because you wouldn't want to wait another year or two for someone to look into your fix. This isn't meant to blame OCC for anything, just to state the truth about things. Should HLR be forked to unlock its evolution? Quite frankly, yeah. Of course.

But let's wrap it up. Parallel execution with a timeout is useful for overcoming bad algorithm performance. This technique is effective in HLR but can also be used for Boolean operations or OpenCascade's Defeaturing algorithm. To limit the execution time of an algorithm, we run a thread and wait for it to join within a prescribed timeout. If the execution time exceeds the limit, we do not interrupt the working thread but simply stop waiting for its execution. Although a worker thread may last indefinitely, we still allow it to waste our CPU resources since we do not have real control over what's being done inside. We assume that if a desperate computation takes an infinite amount of time, it must be fixed, either in the OpenCascade library or by custom patches. Just consider it another workaround for computations that are beyond your control.

Want to discuss this? Jump in to our forum.